Installing PostgreSQL (pgsql) on Kubernetes (k8s)

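This post shows how to deploy PostgreSQL using a Deployment, a Service, a PersistentVolume (PV) and a PersistentVolumeClaim (PVC). The standalone version is meant for learning. For production, the cluster mode is recommended because it supports high availability.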

Standalone

The standalone version is only for learning and practice. Avoid standalone deployments in production.

This deployment uses a PV for persistent storage and injects the configuration through a ConfigMap (cm).

cm.yaml

```yaml
apiVersion: v1
```

pv.yaml

```yaml
kind: PersistentVolume
```

deployment.yaml

```yaml
apiVersion: apps/v1
```

svc.yaml

```yaml
apiVersion: v1
```

Start

```shell
kubectl apply -f cm.yaml
```

Connecting from Go with GORM

Official documentation

Import

```go
"gorm.io/driver/postgres"
```

Connect

```go
dsn := "host=192.168.41.249 user=postgresadmin password=admin12345 dbname=gorm port=32759 sslmode=disable TimeZone=Asia/Shanghai"
```
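The DSN above is a libpq-style `keyword=value` string. If a value (typically the password) ever contains spaces, quotes or backslashes it must be quoted, so assembling the string in code can be safer than editing it by hand. The following is a minimal sketch using only the Go standard library; the helper names `quoteDSNValue` and `buildDSN` are hypothetical, and the quoting rule (wrap the value in single quotes, escape `'` and `\` with a backslash) follows the libpq connection-string rules.

```go
package main

import (
	"fmt"
	"strings"
)

// quoteDSNValue quotes one value of a libpq-style keyword=value string.
// Empty values and values containing spaces, single quotes or backslashes are
// wrapped in single quotes, with ' and \ escaped by a backslash.
func quoteDSNValue(v string) string {
	if v != "" && !strings.ContainsAny(v, ` '\`) {
		return v
	}
	return "'" + strings.NewReplacer(`\`, `\\`, `'`, `\'`).Replace(v) + "'"
}

// buildDSN joins keyword/value pairs, in the given order, into one DSN string.
func buildDSN(pairs [][2]string) string {
	parts := make([]string, 0, len(pairs))
	for _, kv := range pairs {
		parts = append(parts, kv[0]+"="+quoteDSNValue(kv[1]))
	}
	return strings.Join(parts, " ")
}

func main() {
	// Same settings as the hand-written DSN in this section.
	dsn := buildDSN([][2]string{
		{"host", "192.168.41.249"},
		{"user", "postgresadmin"},
		{"password", "admin12345"},
		{"dbname", "gorm"},
		{"port", "32759"},
		{"sslmode", "disable"},
		{"TimeZone", "Asia/Shanghai"},
	})
	fmt.Println(dsn)
}
```

The resulting string is what gets passed to GORM, e.g. `db, err := gorm.Open(postgres.Open(dsn), &gorm.Config{})` with `gorm.io/gorm` and `gorm.io/driver/postgres` imported.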

High availability

Logical replication

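Starting with PostgreSQL 10 (PG for short), PG supports logical replication, which can replicate only some tables, or only some of the databases, on a PG server. A major advantage of logical replication is that it works across PG versions, and the primary and replica nodes do not need the same operating system or hardware architecture. For example, you can replicate between PG 11 on a Linux server and PG 10 on a Windows server. Logical replication can also be used to upgrade the database version without stopping the service.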
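Logical replication is set up with a publication on the source database and a subscription on the target. The sketch below only builds the two SQL statements in Go; the publication, subscription, table and connection-string values are hypothetical. It assumes the publisher runs with `wal_level = logical` and that the table already exists on the subscriber, since logical replication copies rows but not table definitions (DDL). Table names are treated as unqualified identifiers.

```go
package main

import (
	"fmt"
	"strings"
)

// quoteIdent double-quotes a SQL identifier, doubling any embedded double quotes.
func quoteIdent(s string) string {
	return `"` + strings.ReplaceAll(s, `"`, `""`) + `"`
}

// quoteLiteral single-quotes a SQL string literal, doubling any embedded single quotes.
func quoteLiteral(s string) string {
	return "'" + strings.ReplaceAll(s, "'", "''") + "'"
}

// publicationSQL builds the statement to run on the source (publisher) database.
func publicationSQL(pub string, tables []string) string {
	quoted := make([]string, len(tables))
	for i, t := range tables {
		quoted[i] = quoteIdent(t)
	}
	return fmt.Sprintf("CREATE PUBLICATION %s FOR TABLE %s;",
		quoteIdent(pub), strings.Join(quoted, ", "))
}

// subscriptionSQL builds the statement to run on the target (subscriber) database.
func subscriptionSQL(sub, conninfo, pub string) string {
	return fmt.Sprintf("CREATE SUBSCRIPTION %s CONNECTION %s PUBLICATION %s;",
		quoteIdent(sub), quoteLiteral(conninfo), quoteIdent(pub))
}

func main() {
	// Hypothetical names and publisher connection string.
	fmt.Println(publicationSQL("pub_people", []string{"people"}))
	fmt.Println(subscriptionSQL("sub_people",
		"host=pg11-linux port=5432 dbname=mitaka user=repl password=secret",
		"pub_people"))
}
```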

Cluster

There are many cluster HA solutions; here we use stolon on Kubernetes.

Official documentation: https://github.com/sorintlab/stolon

Features

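- Leverages PostgreSQL streaming replication.

- Resilient to any kind of partitioning. While trying to keep maximum availability, it prefers consistency over availability.

- Kubernetes integration lets you achieve PostgreSQL high availability.

- Uses a cluster store such as etcd, consul or the Kubernetes API server as a highly available data store and for leader election.

- Asynchronous (default) and synchronous replication.

- Full cluster setup in minutes.

- Easy and simple cluster administration.

- Can integrate with your preferred backup/restore tool for point-in-time recovery.

- Standby cluster (for multi-site replication and near-zero-downtime migration).

- Automatic service discovery and dynamic reconfiguration (handles postgres and stolon processes changing their addresses).

- Can use pg_rewind for fast instance resynchronization with the current master.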

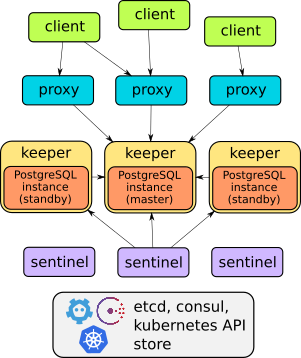

stolon architecture

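Stolon is made of 3 main components:

- keeper: manages a PostgreSQL instance, converging it to the clusterview provided by the sentinel(s).

- sentinel: discovers and monitors the keepers and computes the optimal clusterview.

- proxy: the client access point. It enforces connections to the right PostgreSQL master and forcibly closes connections to masters that were not elected.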

Stolon uses etcd or consul as its main cluster state store (the Kubernetes API server can also be used, as in this deployment).
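To make the division of labour concrete, here is a toy model in Go of how a sentinel could pick a master and how the proxy follows that choice. It is a simplified illustration, not stolon's actual code: the types, field names and the rule "keep the healthy master, otherwise promote the healthy keeper with the most advanced WAL position" are assumptions, and the real sentinel weighs more factors and stores the clusterview in the cluster store.

```go
package main

import (
	"errors"
	"fmt"
)

// Keeper is a hypothetical, simplified view of one keeper as seen by a sentinel.
type Keeper struct {
	UID     string
	Addr    string
	Healthy bool
	WALPos  uint64 // position of the WAL this keeper's instance has reached
}

// ClusterView is a greatly simplified desired state computed by the sentinel.
type ClusterView struct {
	Master string // UID of the elected master keeper
}

// electMaster keeps the current master while it is healthy; otherwise it
// promotes the healthy keeper with the most advanced WAL position.
func electMaster(cur ClusterView, keepers []Keeper) (ClusterView, error) {
	var best *Keeper
	for i := range keepers {
		k := &keepers[i]
		if !k.Healthy {
			continue
		}
		if k.UID == cur.Master {
			return cur, nil // current master is still healthy: no change
		}
		if best == nil || k.WALPos > best.WALPos {
			best = k
		}
	}
	if best == nil {
		return cur, errors.New("no healthy keeper available")
	}
	return ClusterView{Master: best.UID}, nil
}

// proxyTarget returns where the proxy forwards new client connections:
// always the master named in the clusterview, never a standby.
func proxyTarget(view ClusterView, keepers []Keeper) (string, error) {
	for _, k := range keepers {
		if k.UID == view.Master {
			return k.Addr, nil
		}
	}
	return "", fmt.Errorf("master %q not found", view.Master)
}

func main() {
	keepers := []Keeper{
		{UID: "keeper0", Addr: "10.0.0.10:5432", Healthy: true, WALPos: 100},
		{UID: "keeper1", Addr: "10.0.0.11:5432", Healthy: true, WALPos: 90},
	}
	view, _ := electMaster(ClusterView{}, keepers)
	addr, _ := proxyTarget(view, keepers)
	fmt.Println(view.Master, addr) // keeper0 10.0.0.10:5432

	keepers[0].Healthy = false // simulate keeper0 going down
	view, _ = electMaster(view, keepers)
	addr, _ = proxyTarget(view, keepers)
	fmt.Println(view.Master, addr) // keeper1 10.0.0.11:5432
}
```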

Deployment guide:

https://github.com/sorintlab/stolon/blob/master/examples/kubernetes/README.md

Here we run 2 keepers, 1 proxy and 1 sentinel:

```
base-stolon-keeper-0 1/1 Running 1 19h
```

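Because each keeper stores its data on local persistent storage, the HA test mainly checks whether the data stays accessible when one keeper's local data becomes unreachable. On k8s, the sentinel and the proxy are stateless services: when a node goes down, k8s automatically reschedules them onto other nodes. The HA tests therefore focus on the keepers. In larger clusters, running multiple proxies and multiple sentinels can improve access performance and cluster-management performance.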

Initialize the cluster, using the Kubernetes API server as the store backend:

```shell
stolonctl --cluster-name=base-stolon --store-backend=kubernetes --kube-resource-kind=configmap init
```

Exec into the proxy pod and connect to the database with psql:

```shell
psql -h 127.0.0.1 -d postgres -U datatom
```

Create a test database, a test table and test data:

```sql
-- Create the database
```

Check the master node

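pg_stat_replication is a view used mainly to monitor PG streaming replication. Each row in this system view represents one standby, so you can see which standbys are connected and what they are doing. pg_stat_replication is also a good way to check whether a standby is connected.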

```
mitaka=# -- Turn on expanded display
```

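It is often said that pg_stat_replication is a primary-side view, but that is not correct. The view exposes information about WAL sender processes. In other words, if you run cascading replication, a secondary that is itself replicating to other standbys also shows entries in its own pg_stat_replication.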
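Since PostgreSQL 10, pg_stat_replication reports WAL positions in columns such as `sent_lsn` and `replay_lsn`, printed as `X/Y` (the high and low 32 bits of a 64-bit position, in hexadecimal). On the server, `pg_wal_lsn_diff(sent_lsn, replay_lsn)` returns their difference in bytes. The sketch below does the same arithmetic client-side in Go, for example in a monitoring script; the function names and sample LSN values are hypothetical.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseLSN converts a pg_lsn text value such as "0/3000060" into a 64-bit
// byte position: the part before the slash is the high 32 bits and the part
// after it the low 32 bits, both hexadecimal.
func parseLSN(s string) (uint64, error) {
	hi, lo, ok := strings.Cut(s, "/")
	if !ok {
		return 0, fmt.Errorf("invalid LSN %q", s)
	}
	h, err := strconv.ParseUint(hi, 16, 32)
	if err != nil {
		return 0, fmt.Errorf("invalid LSN %q: %w", s, err)
	}
	l, err := strconv.ParseUint(lo, 16, 32)
	if err != nil {
		return 0, fmt.Errorf("invalid LSN %q: %w", s, err)
	}
	return h<<32 | l, nil
}

// lagBytes returns how many bytes of WAL were already sent to the standby but
// not yet replayed there, like pg_wal_lsn_diff(sent_lsn, replay_lsn).
func lagBytes(sentLSN, replayLSN string) (int64, error) {
	sent, err := parseLSN(sentLSN)
	if err != nil {
		return 0, err
	}
	replay, err := parseLSN(replayLSN)
	if err != nil {
		return 0, err
	}
	return int64(sent) - int64(replay), nil
}

func main() {
	// Hypothetical values as they might appear in one pg_stat_replication row.
	lag, err := lagBytes("0/3000148", "0/3000060")
	if err != nil {
		panic(err)
	}
	fmt.Println(lag) // 232
}
```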

Clients can connect only through the proxy, and the proxy always routes them to the master.

On the master you cannot see the standby's WAL receiver status, because pg_stat_wal_receiver only has a row on a standby:

```
mitaka=# select * from pg_stat_wal_receiver;
```

Get the pgsql cluster status from the command line:

```shell
stolonctl --cluster-name=base-stolon --store-backend=kubernetes --kube-resource-kind=configmap status
```

Cluster data:

```shell
stolonctl --cluster-name=base-stolon --store-backend=kubernetes --kube-resource-kind=configmap clusterdata read
```

```json
{
```
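When testing failover it helps to know which keeper currently hosts the master. The sketch below reads the output of `clusterdata read` from stdin and prints that keeper's UID. The JSON field names it relies on (`cluster.status.master` and `dbs.<uid>.spec.keeperUID`) are an assumption about stolon's cluster data format and are not confirmed by the truncated output in this section; check them against your own output before relying on this.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"os"
)

// clusterData declares only the fields this sketch needs.
// ASSUMPTION: field names recalled from stolon's cluster data format;
// verify them against your own `clusterdata read` output.
type clusterData struct {
	Cluster struct {
		Status struct {
			Master string `json:"master"` // UID of the master db
		} `json:"status"`
	} `json:"cluster"`
	DBs map[string]struct {
		Spec struct {
			KeeperUID string `json:"keeperUID"` // keeper that runs this db
		} `json:"spec"`
	} `json:"dbs"`
}

// masterKeeper returns the UID of the keeper that runs the current master db.
func masterKeeper(raw []byte) (string, error) {
	var cd clusterData
	if err := json.Unmarshal(raw, &cd); err != nil {
		return "", err
	}
	db, ok := cd.DBs[cd.Cluster.Status.Master]
	if !ok {
		return "", fmt.Errorf("master db %q not found in dbs", cd.Cluster.Status.Master)
	}
	return db.Spec.KeeperUID, nil
}

func main() {
	// Reads the clusterdata JSON piped in on stdin.
	raw, err := io.ReadAll(os.Stdin)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	keeper, err := masterKeeper(raw)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println("master keeper:", keeper)
}
```

For example: `stolonctl --cluster-name=base-stolon --store-backend=kubernetes --kube-resource-kind=configmap clusterdata read | go run main.go`.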

Restart keeper0:

```shell
kubectl delete pod base-stolon-keeper-0
```

Or:

```shell
# Get the docker ID
```

Check the cluster status:

```
=== Active sentinels ===
```

Write another 1,000,000 rows:

```sql
insert into people
```

Scale keeper1 down to simulate keeper1 shutting down:

```shell
kubectl scale --replicas=1 statefulset.apps/base-stolon-keeper
```

```
NAME READY STATUS RESTARTS AGE
```

Check the cluster status:

```
=== Active sentinels ===
```

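Query the data again. The first execution returns an error, which shows that the proxy switched to the new master during this process; running the query again returns the result.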

```sql
select * from pg_stat_replication;
```

Write more data, then scale keeper1 back up to check whether split brain occurs:

```shell
kubectl scale --replicas=2 statefulset.apps/base-stolon-keeper
```

```
=== Active sentinels ===
```

The data is intact.
